|

Listen to this article

|

Boston Dynamics has turned its Spot quadruped, typically used for inspections, into a robot tour guide. The company integrated the robot with ChatGPT and other AI models as a proof of concept for the potential robotics applications of foundational models.

In the last year, we’ve seen huge advances in the abilities of Generative AI, and much of those advances have been fueled by the rise of large Foundation Models (FMs). FMs are large AI systems that are trained on a massive dataset.

These FMs typically have millions of billions of parameters and were trained by scraping raw data from the public interested. All of this data gives them the ability to develop Emergent Behaviors, or the ability to perform tasks outside of what they were directly trained on, allowing them to be adapted for a variety of applications and act as a foundation for other algorithms.

The Boston Dynamics team spent the summer putting together some proof-of-concept demos using FMs for robotic applications. The team then expanded on these demos during an internal hackathon. The company was particularly interested in a demo of Spot making decisions in real-time based on the output of FMs.

Large language models (LLMs), like ChatGPT, are basically very capable autocomplete algorithms, with the ability to take in a stream of text and predict the next bit of text. The Boston Dynamics team was interested in LLMs’ ability to roleplay, replicate culture and nuance, form plans, and maintain coherence over time. The team was also inspired by recently released Visual Question Answering (VQA) models that can caption images and answer simple questions about them.

A robotic tour guide seemed like the perfect demo to test these concepts. The robot would walk around, look at objects in the environment, and then use a VQA or captioning model to describe them. The robot would also use an LLM to elaborate on these descriptions, answer questions from the tour audience, and plan what actions to take next.

In this scenario, the LLM acts as an improv actor, according to the Boston Dynamics team. The engineer provides it a broad strokes scrip and the LLM fills in the blanks on the fly. The team wanted to play into the strengths of the LLM, so they weren’t looking for a perfectly factual tour. Instead, they were looking for entertainment, interactivity, and nuance.

Learn from Agility Robotics, Amazon, Disney, Teradyne and many more.

Learn from Agility Robotics, Amazon, Disney, Teradyne and many more.

Turning Spot into a tour guide

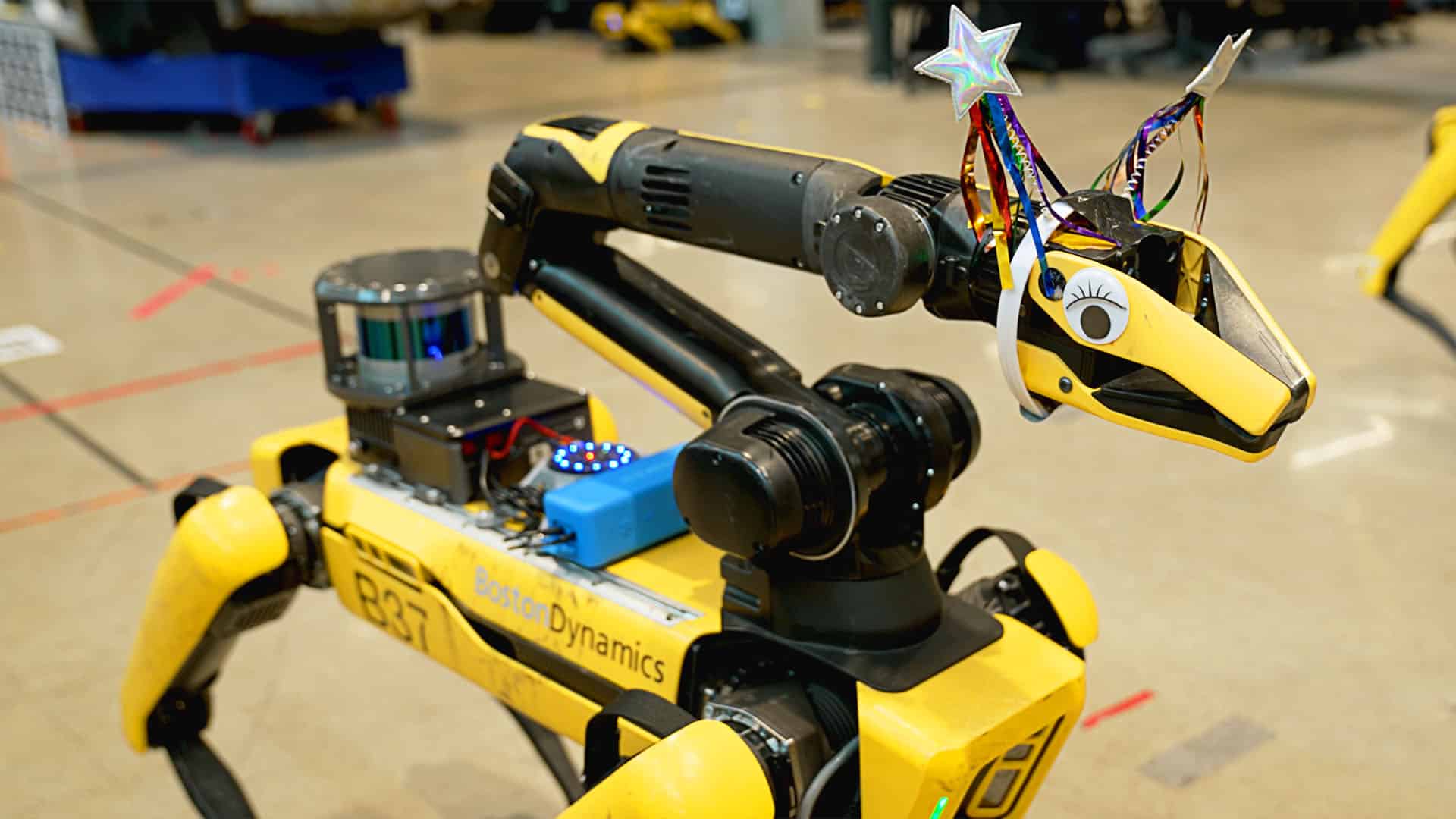

The hardware setup for the Spot tour guide. 1. Spot EAP 2; 2. Reseaker V2; 3. Bluetooth Speaker; 4. Spot Arm and gripper camera. | Source: Boston Dynamics

The demo that the team planned required Spot to be able to speak to a group and hear questions and prompts from them. Boston Dynamics 3D printed a vibration-resistant mount for a Respeaker V2 speaker. They attached this to Spot’s EAP 2 payload using a USB.

Spot is controlled using an offboard computer, either a desktop PC or a laptop, which uses Spot’s SDK to communicate. The team added a simple Spot SDK service to communicate audio with the EAP 2 payload.

Now that Spot had the ability to handle audio, the team needed to give it conversation skills. They started with OpenAI’s ChaptGPT API on gpt-3.5, and then upgraded to gpt-4 when it became available. Additionally, the team did tests on smaller open-source LLMs.

The team took inspiration from research at Microsoft and prompted GPT by making it appear as though it was writing the next line in a Python script. They then provided English documentation to the LLM in the form of comments and evaluated the output of the LLM as though it were Python code.

The Boston Dynamics team also gave the LLM access to its SDK, a map of the tour site with 1-line descriptions of each location, and the ability to say phrases or ask questions. They did this by integrating a VQA and speech-to-text software.

They fed the robot’s gripper camera and front body camera into BLIP-2, and ran it in either visual question answering mode or image captioning mode. This runs about once a second, and the results are fed directly into the prompt.

To give Spot the ability to hear, the team fed microphone data in chunks to OpenAI’s whisper to convert it into English text. Spot waits for a wake-up word, like “Hey, Spot” before putting that text into the prompt, and it suppresses audio when it its speaking itself.

Because ChatGPT generates text-based responses, the team needed to run these through a text-to-speech tool so the robot could respond to the audience. The team tried a number of off-the-shelf text-to-speech methods, but they settled on using the cloud service ElevenLabs. To help reduce latency, they also streamed the text to the platform as “phrases” in parallel and then played back the generated audio.

The team also wanted Spot to have more natural-looking body language. So they used a feature in the Spot 3.3 update that allows the robot to detect and track moving objects to guess where the nearest person was, and then had the robot turn its arm toward that person.

Using a lowpass filter on the generated speech, the team was able to have the gripper mimic speech, sort of like the mouth of a puppet. This illusion was enhanced when the team added costumes or googly eyes to the gripper.

How did Spot perform?

The team gave Spot’s arm a hat and googly eyes to make it more appealing. | Source: Boston Dynamics

The team noticed new behavior emerging quickly from the robot’s very simple action space. They asked the robot, “Who is Marc Raibert?” The robot didn’t know the answer and told the team that it would go to the IT help desk and ask, which it wasn’t programmed to do. The team also asked Spot who its parents were, and it went to where the older versions of Spot, the Spot V1 and Big Dog, were displayed in the office.

These behaviors show the power of statistical association between the concepts of “help desk” and “asking a question,” and “parents” with “old.” They don’t suggest the LLM is conscious or intelligent in a human sense, according to the team.

The LLM also proved to be good at staying in character, even as the team gave it more absurd personalities to try out.

While the LLM performed well, it did frequently make things up during the tour. For example, it kept telling the team that Stretch, Boston Dynamics’ logistics robot, is for yoga.

Moving forward, the team plans to continue exploring the intersection of artificial intelligence and robotics. To them, robotics provides a good way to “ground” large foundation models in the real world. Meanwhile, these models also help provide cultural context, general commonsense knowledge, and flexibility that could be useful for many robotic tasks.

Tell Us What You Think!