
While humanoid robots have burst into mainstream attention in the past year, and more and more companies have released their own models, many of these robots operate similarly. The typical humanoid uses arms and grippers to handle objects, and its rigid legs provide a mode of transportation. Researchers at the Toyota Research Institute, or TRI, said they want to take humanoids a step further with the Punyo robot.

Punyo isn’t a traditional humanoid robot in that it doesn’t yet have legs. So far, TRI’s team is working with just the torso of a robot and developing manipulation skills.

“Our mission is to help people with everyday tasks in our homes and elsewhere,” said Alex Alspach, one of TRI’s tech leads for whole-body manipulation, in a video. “Many of these manipulation tasks require more than just our hands and fingers.”

When humans have to carry a large object, we don’t just use our arms to carry it, he explained. We might lean the object against our chest to lighten the load on our arms and use our backs to push through doors to reach our destination.

Manipulation that uses the whole body is tricky for humanoids, for which balance is a major issue. However, TRI’s researchers designed Punyo to do just that.

“Punyo does things differently. Taking advantage of its whole body, it can carry more than it could simply by pressing with outstretched hands,” added Andrew Beaulieu, one of TRI’s tech leads for whole-body manipulation. “Softness, tactile sensing, and the ability to make a lot of contact advantageously allow better object manipulation.”

TRI said that “punyo” is a Japanese word that evokes the image of a cute yet resilient robot. TRI’s stated goal was to create a robot that is soft, interactive, affordable, safe, durable, and capable.


Robot includes soft limbs with internal sensors

Punyo’s hands, arms, and chest are covered with compliant materials and tactile sensors that allow it to feel contact. The soft materials allow the robot’s body to conform with the objects it’s manipulating.

Underneath this soft covering are two “hard” robot arms, a torso frame, and a waist actuator. TRI says it aimed to combine the precision of a traditional robot with the compliance, impact resistance, and sensing simplicity of soft robotic systems.

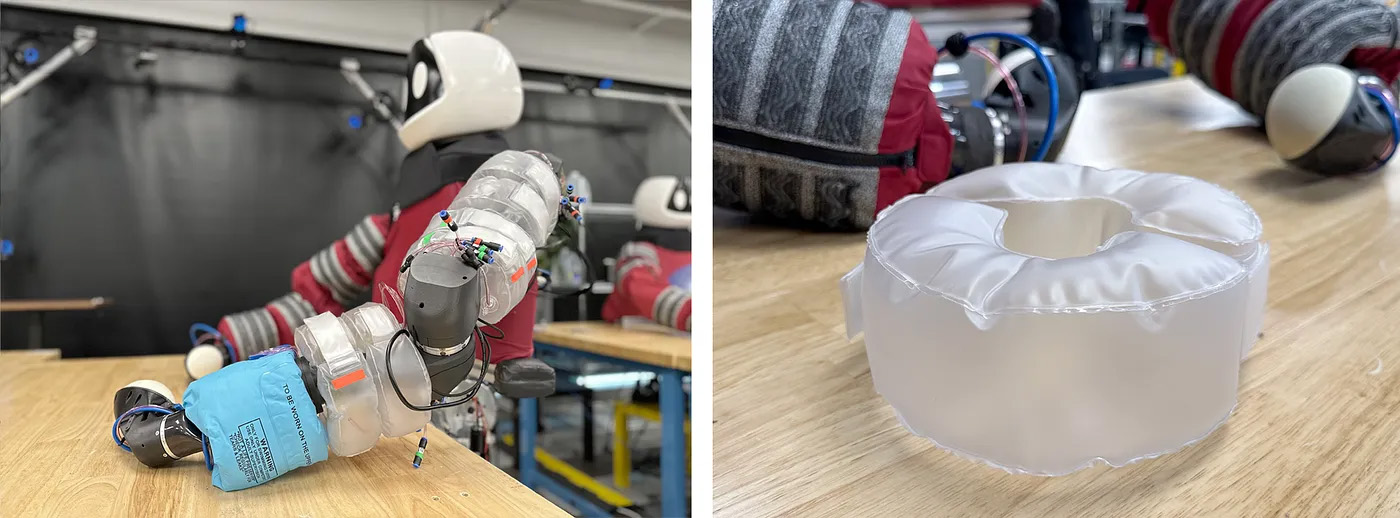

Punyo’s arms are covered entirely in air-filled bladders, or bubbles. Each bubble connects via a tube to a pressure sensor, which can feel forces applied to the bubble’s outer surface.

Each bubble can also be individually pressurized to a desired stiffness, and the bubbles add around 5 cm of compliance to the surface of the robot’s arms.
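To make the sensing idea concrete, here is a minimal Python sketch of how readings from the arm bubbles could be turned into a simple contact map. It is not TRI’s code: the `Bubble` class, the kPa setpoints, and the 0.5 kPa contact threshold are all hypothetical, and the sketch assumes each bubble is sealed at its setpoint so that pressing on it raises the measured pressure.

```python
from dataclasses import dataclass


@dataclass
class Bubble:
    """One air-filled arm bubble (hypothetical model, not TRI's implementation)."""
    name: str
    setpoint_kpa: float          # inflation pressure, which sets the bubble's stiffness
    threshold_kpa: float = 0.5   # assumed pressure rise that counts as contact

    def in_contact(self, measured_kpa: float) -> bool:
        # Pressing on a sealed bubble compresses its air, so the tube-connected
        # sensor reads a pressure above the inflation setpoint.
        return measured_kpa - self.setpoint_kpa > self.threshold_kpa


def contact_map(bubbles, readings_kpa):
    """Map each bubble's name to True/False for one frame of sensor readings."""
    return {b.name: b.in_contact(p) for b, p in zip(bubbles, readings_kpa)}


arm = [Bubble("upper_arm", 3.0), Bubble("forearm", 2.0), Bubble("wrist", 2.0)]
print(contact_map(arm, [3.1, 4.2, 2.0]))
# {'upper_arm': False, 'forearm': True, 'wrist': False}
```

Because contact is judged relative to each bubble’s own setpoint, bubbles inflated to different stiffnesses can share the same detection logic.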

Instead of traditional grippers, Punyo has “paws,” each made up of a single high-friction latex bubble with a camera inside. The team printed a dot pattern on the inside of these bubbles, and the camera watches for deformations in this pattern to estimate forces.

Left: Under Punyo’s sleeves are bubbles, air tubes, and pressure sensors that add compliance and tactile sensing to the arms. Right: Closeup of a pair of arm bubbles. | Source: Toyota Research Institute
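A similarly simplified sketch shows how a camera watching the printed dots inside a paw might turn their motion into a force estimate. It assumes a hypothetical tracker has already matched each dot to its rest position, and it uses an assumed linear calibration constant (`newtons_per_pixel`); real vision-based tactile sensors typically rely on richer, calibrated models.

```python
import math


def mean_displacement(rest_dots, seen_dots):
    """Average distance (in pixels) each printed dot moved from its rest position."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(rest_dots, seen_dots):
        total += math.hypot(x1 - x0, y1 - y0)
    return total / len(rest_dots)


def estimate_force(rest_dots, seen_dots, newtons_per_pixel=0.05):
    """Force magnitude under an assumed linear calibration (hypothetical constant)."""
    return newtons_per_pixel * mean_displacement(rest_dots, seen_dots)


# Dot centers with the paw at rest vs. while pressing on an object (illustrative).
rest = [(0, 0), (10, 0), (0, 10)]
pressed = [(3, 4), (13, 4), (3, 14)]
print(round(estimate_force(rest, pressed), 3))  # 0.25
```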

Punyo learns to use full-body manipulation

Punyo learned contact-rich policies using two methods: diffusion policy and example-guided reinforcement learning. TRI announced its diffusion policy method last year. With this method, the robot uses human demonstrations to learn robust sensorimotor policies for hard-to-model tasks.
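Diffusion policy generates actions by starting from random noise and repeatedly denoising it, conditioned on what the robot observes. The toy Python sketch below shows only that DDPM-style sampling loop, using the linear noise schedule from the original DDPM paper. The noise predictor is a hypothetical “oracle” that already knows the target action, standing in for a trained, observation-conditioned network, and a real diffusion policy typically predicts a sequence of actions rather than the single vector used here.

```python
import math
import random


def make_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: per-step betas, alphas, and cumulative alpha-bars."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def sample_action(predict_noise, obs, dim, steps=1000, seed=0):
    """DDPM-style reverse process: turn Gaussian noise into an action vector."""
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(steps)):
        eps = predict_noise(x, t, obs)  # a trained network would condition on obs
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # one standard DDPM variance choice
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # the final step adds no noise
    return x


def make_oracle(target, alpha_bars):
    """Hypothetical 'perfect' noise predictor that already knows the demonstrated action."""
    def predict_noise(x, t, obs):
        ab = alpha_bars[t]
        return [(xi - math.sqrt(ab) * ai) / math.sqrt(1.0 - ab) for xi, ai in zip(x, target)]
    return predict_noise


target = [0.2, -0.5, 0.1]  # illustrative 3-D action, e.g. a small hand displacement
_, _, alpha_bars = make_schedule()
action = sample_action(make_oracle(target, alpha_bars), obs=None, dim=3)
print([round(a, 6) for a in action])  # [0.2, -0.5, 0.1]
```

With the oracle, the loop recovers the target exactly, which only checks the plumbing; in practice the quality of the sampled actions depends entirely on how well the learned network predicts the noise from human demonstrations.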

Example-guided reinforcement learning requires tasks to be modeled in simulation and uses a small set of demonstrations to guide the robot’s exploration. TRI said it uses this kind of learning to achieve robust manipulation policies for tasks it can model in simulation.

When the robot can see demonstrations of these tasks, it can learn them more efficiently. The demonstrations also give the TRI team more room to influence the style of motion the robot uses to achieve the task.

The team uses adversarial motion priors (AMP), which are traditionally used for stylizing computer-animated characters, to incorporate human motion imitation into its reinforcement learning pipeline.
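One way to picture how AMP blends imitation into reinforcement learning is the style reward described in the original AMP paper (Peng et al., 2021): a discriminator scores whether a state transition looks like the reference motion, and that score becomes a reward added to the task reward. The Python sketch below assumes that formulation; the 50/50 weights are hypothetical, and the sketch is not a claim about TRI’s exact reward.

```python
def amp_style_reward(disc_score: float) -> float:
    """Style reward from a least-squares discriminator, as in the AMP paper.

    The discriminator is trained to output about +1 for transitions taken from
    the reference (human) motion and about -1 for the policy's own transitions,
    so scores near +1 earn the most reward.
    """
    return max(0.0, 1.0 - 0.25 * (disc_score - 1.0) ** 2)


def combined_reward(task_reward: float, disc_score: float,
                    w_task: float = 0.5, w_style: float = 0.5) -> float:
    """Weighted sum of task and style rewards (the weights here are hypothetical)."""
    return w_task * task_reward + w_style * amp_style_reward(disc_score)


print(amp_style_reward(1.0), amp_style_reward(0.0), amp_style_reward(-1.0))  # 1.0 0.75 0.0
```

Clamping at zero keeps transitions that the discriminator finds clearly unnatural from producing a negative reward, and shifting the weights lets the team trade task success against how human-like the motion looks.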

Reinforcement learning does require the team to model tasks in simulation for training. To produce demonstrations for this training, TRI used a model-based planner instead of teleoperation. It called this process “plan-guided reinforcement learning.”

TRI claimed that using a planner makes possible longer-horizon tasks that are difficult to teleoperate. The team can also automatically generate any number of demonstrations, reducing its pipeline’s dependence on human input. This moves TRI closer to scaling up the number of tasks that Punyo can handle.
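The scaling argument is easy to see in code: once a planner produces demonstrations, the number of demonstrations is just a parameter. In this Python sketch the “planner” is a hypothetical straight-line interpolator in joint space, far simpler than the model-based planner TRI describes, and the goal configuration and randomization range are made up for illustration.

```python
import random


def plan_straight_line(start, goal, steps):
    """Hypothetical planner stand-in: evenly spaced waypoints from start to goal."""
    path = []
    for k in range(steps + 1):
        u = k / steps
        path.append([(1.0 - u) * s + u * g for s, g in zip(start, goal)])
    return path


def generate_demos(goal, n_demos, steps=20, spread=0.3, seed=0):
    """Generate n_demos planned demonstrations from randomized start configurations."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n_demos):
        start = [g + rng.uniform(-spread, spread) for g in goal]
        demos.append(plan_straight_line(start, goal, steps))
    return demos


demos = generate_demos(goal=[0.0, 0.5, -0.2], n_demos=100)
print(len(demos), len(demos[0]))  # 100 21
```

Asking for 1,000 demonstrations instead of 100 changes one argument and requires no extra teleoperation time.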
