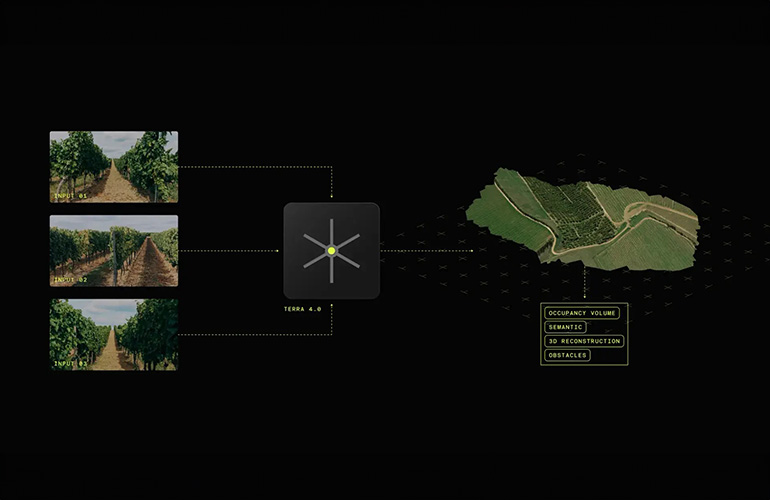

Stereolabs Inc. today introduced Terra AI as the latest addition to its vision portfolio. The new platform uses monocular and stereo vision to provide 4D volumetric perception, according to the company.

“As we further collaborate with manufacturers and system integrators, our latest Terra AI technology aims to address a growing need for a more open, adaptable, and scalable vision platform delivering 360-degree perception and driving true autonomy,” stated Cecile Schmollgruber, CEO of Stereolabs. “Terra AI will allow companies across industries to invest in autonomous robotics at scale, helping them run their operations with greater efficiency and precision at lower cost.”

Stereolabs provides 3D depth and motion-sensing systems based on stereo vision and artificial intelligence. The company, which has offices in New York, San Francisco, and Paris, said its hardware and software stacks and ecosystem support developers of robots, drones, and vehicles.

Stereolabs designs for simultaneous image processing

Industries ranging from agriculture and construction to logistics are under pressure to increase and accelerate production, even as they contend with labor shortages, noted Stereolabs. Such pressure can lead to unsafe working conditions, but robots can alleviate the problems if they can evaluate their surroundings, interpret data, and complete tasks independently, it said.

“Advanced sensing technology is crucial for unlocking those capabilities, and Terra AI is answering the call,” Stereolabs asserted.

Vision-based full autonomy for robots requires multiple tasks to be performed simultaneously and efficiently on embedded hardware, said the company. These tasks include depth estimation for spatial awareness, visual-inertial odometry for indoor and outdoor localization, semantic understanding for obstacle classification, terrain mapping, and robust detection of people around the robot for safety.

Conventional sensing technology can only process images from one or two cameras at a time, said Stereolabs. Terra AI is designed to perform depth estimation, localization, semantic understanding, obstacle detection, and fusion across multiple cameras in both space and time. The company said it can process images from six to eight front, back, and surround cameras for autonomous systems.

“This new technology represents a leap forward in the ability for autonomous robotics to perceive space,” it added.

Jetson and Terra AI offer affordable perception

Stereolabs said its vision platform combines Terra AI with a high-performance NVIDIA Jetson module, as well as software and the ZED stereo and new mono cameras.

Terra AI is a large neural network architecture that Stereolabs said uses fast, memory-efficient, low-level code to handle images from multiple cameras simultaneously on low-power embedded computers. This enables the processing of high-frequency, high-volume data from ZED cameras on an embedded NVIDIA Jetson platform.

Terra AI also offers a scalable and affordable way to pair low-cost cameras with NVIDIA’s Jetson Orin GPU for a 360-degree view around autonomous machines, said Stereolabs. With the enhanced perception, tractors, mowers, utility vehicles, autonomous mobile robots (AMRs), and robotic arms can safely navigate and manipulate objects without expensive radar or lidar, it said.

ZED SDK support to come

Terra AI is now in research preview. It will be available to Stereolabs Enterprise customers in the second quarter of 2024 and later to all users via the ZED software development kit (SDK). More than 100,000 developers worldwide use the ZED SDK for systems that operate around the clock, said the company.

The ZED SDK can scale from entry-tier, front-camera applications to higher levels of automation that require comprehensive surround-view camera applications, said Stereolabs.

It added that the SDK supports flexible deployment options, allowing for a common implementation of features and requirements across machine types.
